Continuing my ongoing theme of live audio/visual synchronisation, I have some new links on using Blender, specifically its game engine, as a visuals tool.

I had previously come across a Blender script called 'Midi_Import_X' (blog for that here). This script doesn't work in real time. Instead, it takes pre-built MIDI sequences and lets actions be triggered by each signal when the scene is rendered. For instance, a note might make an eye blink at a certain point in the timeline.
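To make the idea a little more concrete, here is a minimal sketch of the timing maths a script like this has to do: converting a note's position in a MIDI file (measured in ticks) into a frame number on the timeline, where a keyframe such as an eye blink could then be placed. This is not taken from Midi_Import_X itself. It assumes a single constant tempo, 480 ticks per quarter note, 24 fps, a start frame of 1 and a made-up list of note events, and it leaves out the Blender calls for actually inserting keyframes.

```python
from dataclasses import dataclass


@dataclass
class NoteEvent:
    tick: int  # position of the note-on in the MIDI file, in ticks
    note: int  # MIDI note number (0-127)


def tick_to_frame(tick: int, ppq: int = 480, tempo_us: int = 500_000,
                  fps: int = 24, start_frame: int = 1) -> int:
    """Convert a MIDI tick position to the nearest timeline frame.

    Assumes one constant tempo: tempo_us is microseconds per quarter note
    (500000 = 120 BPM, the MIDI default) and ppq is ticks per quarter note.
    """
    seconds = tick * tempo_us / (ppq * 1_000_000)
    return start_frame + round(seconds * fps)


def frames_for_note(events, note, **timing):
    """Return the frames where a given note starts, e.g. to key a blink."""
    return [tick_to_frame(e.tick, **timing) for e in events if e.note == note]


# Made-up example sequence: note 36 on beats 1 and 3, note 38 on beats 2 and 4.
events = [NoteEvent(0, 36), NoteEvent(480, 38), NoteEvent(960, 36), NoteEvent(1440, 38)]
print(frames_for_note(events, 36))  # → [1, 25]
```

At 120 BPM one beat lasts half a second, i.e. 12 frames at 24 fps, which is why note 36 on beats 1 and 3 lands on frames 1 and 25.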

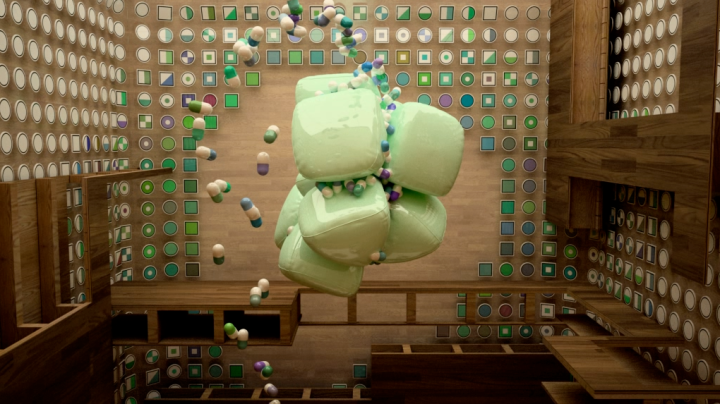

Click on the image above to see a nice example of this.

Now if only this could be done live...

The next example is a script/plug-in called Pyppet (link for download here). It allows certain elements to be controlled with various input devices (including the Wiimote). However, at the moment neither MIDI nor the Blender game engine is mentioned. As far as I can tell, it is only for use at the animation stage of linear movies. If it allowed MIDI control in the GE, it would be perfect...


All of this would help in my quest to create visuals that link up perfectly with sounds as they are being played. One of my current projects, using the track 'Starter' by Boys Noize, relies on a lot of keyframing to hook sounds to visuals. While this process is satisfying once it's done, it is time-consuming and linear: it doesn't allow the visuals to be synced to other songs.

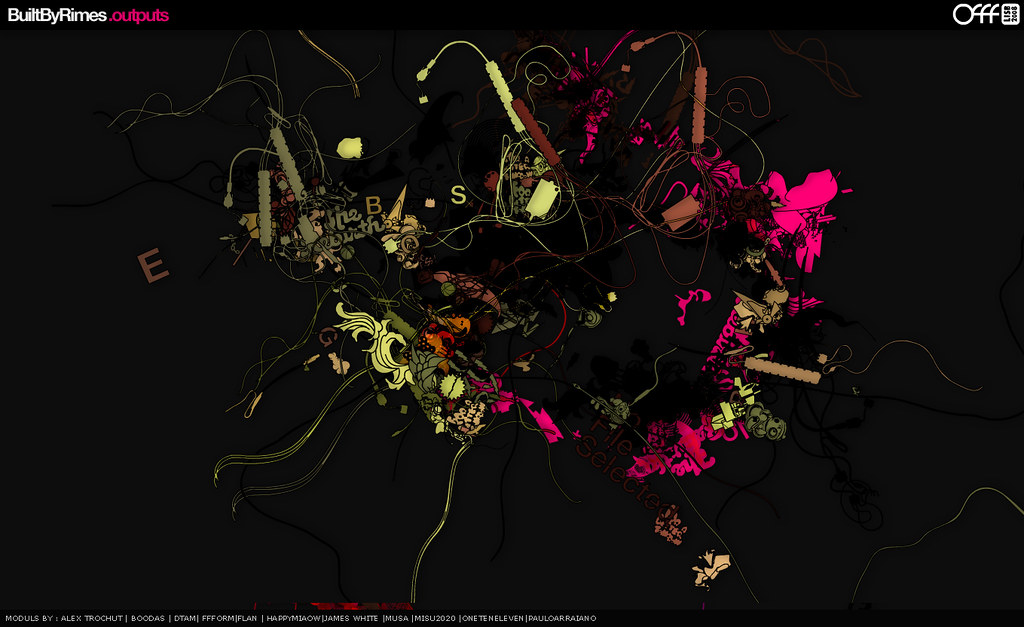

Here is a clip of one of the sections...

Going back to Pyppet: if certain animation actions (such as the flashing X in the video) were saved and then mapped to controllers, the flashing X could be synced to this song, or indeed to any other song being played. The only catch with this method is that MIDI sequences would need to be created for every song that is going to be played live. This isn't really much of a downside, however; it just means a bit more preparation if the song you are playing is not your own (e.g. when DJing). If the track is your own and you already have the MIDI sequences, this method of creating visuals would be perfect.
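The mapping idea can be sketched as a simple lookup table from MIDI note numbers to saved animation actions, so that the same set of actions can be driven by any song's MIDI sequence. This is a hypothetical illustration rather than anything Pyppet provides: the action names, the note numbers and the (time in seconds, note) event format are all made up, and actually triggering the action in Blender or its game engine is left out.

```python
# Hypothetical mapping from MIDI note numbers to saved animation actions.
ACTION_FOR_NOTE = {
    36: "flash_x",       # e.g. kick drum -> the flashing X
    38: "blink_eye",
    42: "pulse_lights",
}


def schedule_actions(events, mapping=ACTION_FOR_NOTE):
    """Turn a song's (time_seconds, note) events into (time, action) triggers.

    Events are sorted by time, and notes with no mapped action are skipped,
    so the same mapping can be reused with any song that has a MIDI sequence.
    """
    return [(t, mapping[note]) for t, note in sorted(events) if note in mapping]


# Two made-up MIDI sequences for two different songs.
song_a = [(0.0, 36), (0.5, 42), (1.0, 36), (1.25, 60)]
song_b = [(0.4, 36), (0.0, 38)]
print(schedule_actions(song_a))
print(schedule_actions(song_b))
```

Only the MIDI sequence changes from song to song; the saved actions and the mapping stay the same, which is the extra preparation mentioned above.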

More on this soon I'm sure...